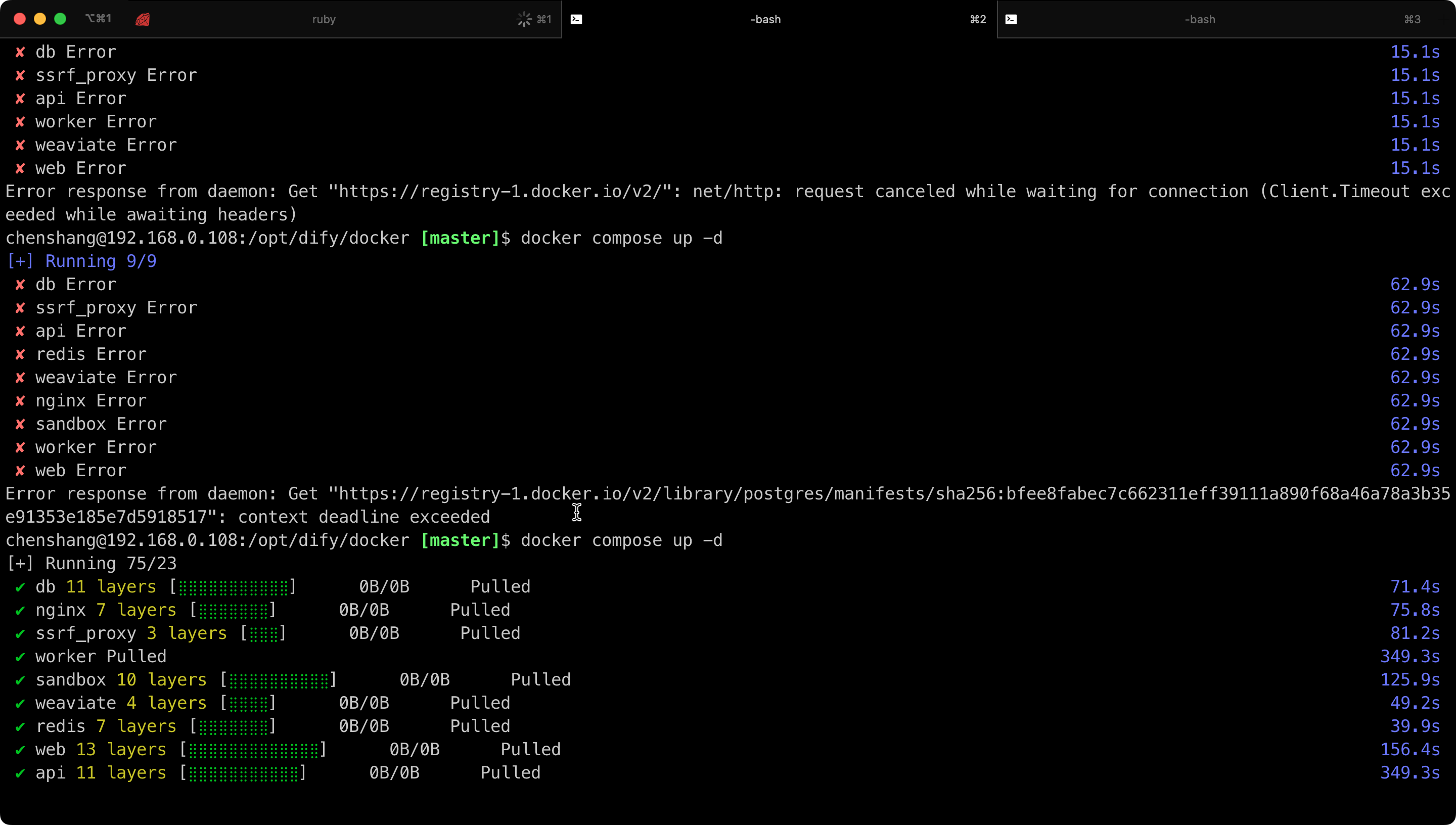

Dify image pull fails with EOF

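Add registry mirrors. The ones below work at the moment. There's no telling how long they will last, but they'll do for now. On Docker Desktop the config goes under Settings → Docker Engine; on Linux it goes in /etc/docker/daemon.json. Apply it and restart Docker afterwards.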
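Since mirrors come and go, a quick reachability check can save a round of failed pulls. The Python sketch below is a hypothetical helper, not part of Docker or Dify. It reads a daemon.json-style file, drops duplicate entries, and requests each mirror's `/v2/` endpoint from the Docker Registry HTTP API V2, counting a 200 or 401 reply as "alive". A mirror that answers there can still fail to serve a given image, so treat the result as a first filter only.

```python
import http.client
import json
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def dedupe(mirrors):
    """Strip trailing slashes and drop repeated entries, keeping the original order."""
    return list(dict.fromkeys(m.rstrip("/") for m in mirrors))


def probe(mirror, timeout=5.0):
    """Return True if GET <mirror>/v2/ answers the way a registry would (200 or 401)."""
    url = mirror.rstrip("/") + "/v2/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        # 401 means a registry is there but expects a token: still counts as alive.
        return err.code == 401
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # DNS failure, refused connection, timeout, TLS error, malformed URL...
        return False


def live_mirrors(config_path, workers=8):
    """Read a daemon.json-style file and return only the mirrors that responded."""
    with open(config_path, encoding="utf-8") as f:
        mirrors = dedupe(json.load(f).get("registry-mirrors", []))
    # Probe in parallel so dead mirrors' timeouts don't add up.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(probe, mirrors))
    return [m for m, ok in zip(mirrors, results) if ok]
```

For example, saving the config below as `daemon.json` and calling `live_mirrors("daemon.json")` returns the subset of URLs worth keeping.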

```json
{
  "builder": {
    "gc": {
      "defaultKeepStorage": "20GB",
      "enabled": true
    }
  },
  "experimental": false,
  "registry-mirrors": [
    "https://9cpn8tt6.mirror.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://mirror.ccs.tencentyun.com",
    "https://docker.1panel.live",
    "https://2a6bf1988cb6428c877f723ec7530dbc.mirror.swr.myhuaweicloud.com",
    "https://docker.m.daocloud.io",
    "https://hub-mirror.c.163.com",
    "https://mirror.baidubce.com",
    "https://dockerhub.icu",
    "https://docker.registry.cyou",
    "https://docker-cf.registry.cyou",
    "https://dockercf.jsdelivr.fyi",
    "https://docker.jsdelivr.fyi",
    "https://dockertest.jsdelivr.fyi",
    "https://mirror.aliyuncs.com",
    "https://dockerproxy.com",
    "https://docker.nju.edu.cn",
    "https://docker.mirrors.sjtug.sjtu.edu.cn",
    "https://docker.mirrors.ustc.edu.cn",
    "https://mirror.iscas.ac.cn",
    "https://docker.rainbond.cc"
  ]
}
```

Mounts denied: path is not shared from the host and is not known to Docker

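```text
Error response from daemon: Mounts denied:
The path /opt/dify/docker/volumes/db/data is not shared from the host and is not known to Docker.
You can configure shared paths from Docker -> Preferences... -> Resources -> File Sharing.
See https://docs.docker.com/desktop/mac for more info.
```

As the message says, Docker Desktop on Mac can only bind-mount directories it shares with its VM. Either add /opt/dify under Resources → File Sharing, or clone Dify into a directory that is shared by default, such as one under /Users.

Adding locally installed Ollama models to Dify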

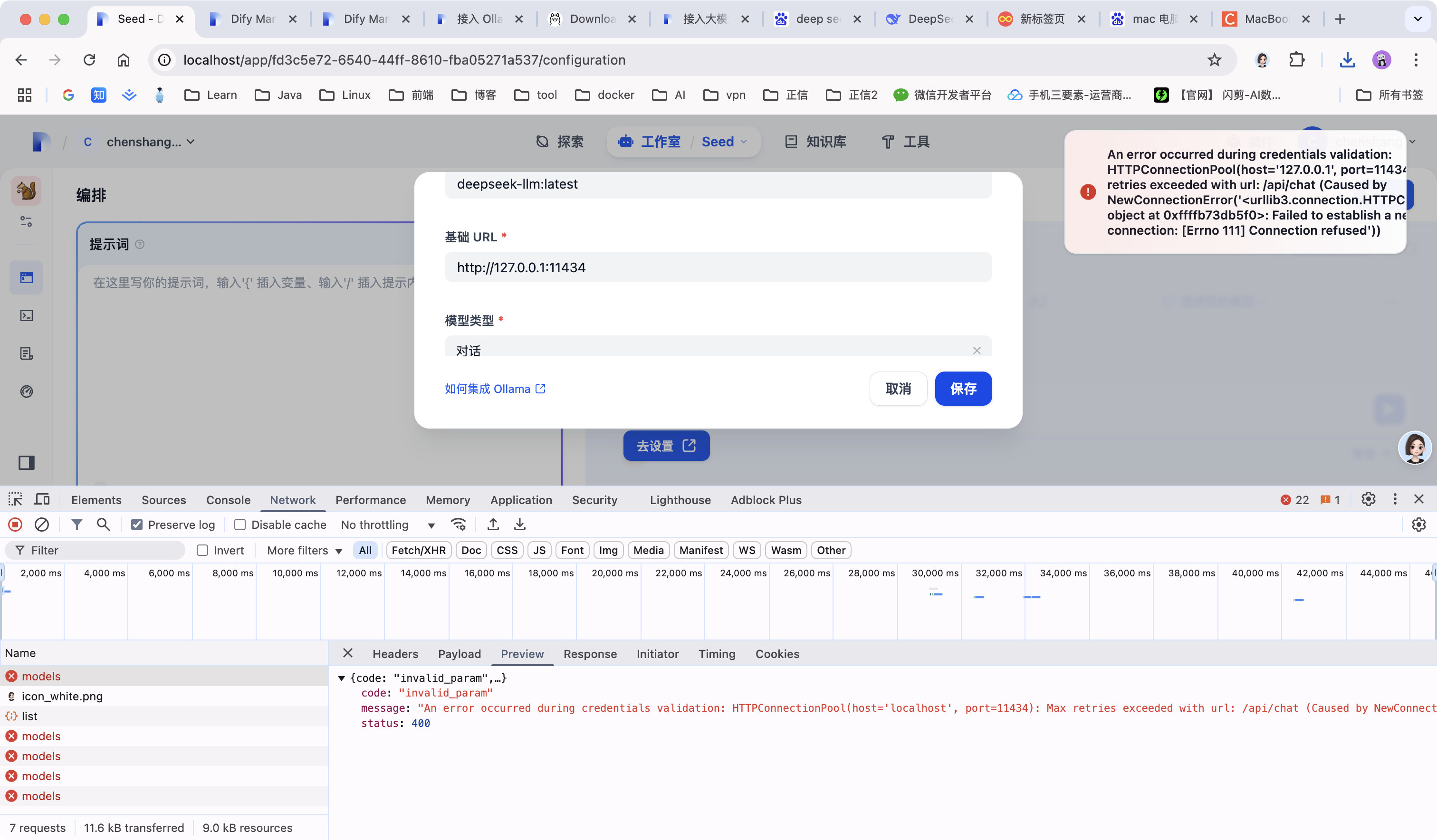

If you deploy Dify and Ollama with Docker, you may run into errors like these:

```text
httpconnectionpool (host=127.0.0.1, port=11434): max retries exceeded with url:/cpi/chat (Caused by NewConnectionError ('<urllib3.connection.HTTPConnection object at 0x7f8562812c20>: fail to establish a new connection:[Errno 111] Connection refused'))

httpconnectionpool (host=localhost, port=11434): max retries exceeded with url:/cpi/chat (Caused by NewConnectionError ('<urllib3.connection.HTTPConnection object at 0x7f8562812c20>: fail to establish a new connection:[Errno 111] Connection refused'))
```

This error means the Dify container cannot reach the Ollama service. Inside a container, localhost refers to the container itself, not to the host or to other containers. To fix it, Ollama has to be exposed to the network instead of listening on loopback only.

Setting the environment variable on Mac

If Ollama runs as the macOS application, set the environment variable with launchctl setenv:

```bash
launchctl setenv OLLAMA_HOST "0.0.0.0"
```

Then restart the Ollama application.
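To confirm the setting took effect after the restart, one option is to connect to port 11434 through the Mac's LAN address instead of 127.0.0.1: while Ollama is still bound to loopback only, that connection is refused. The Python sketch below assumes Ollama's default port 11434 (the one in the errors above) and finds the outbound interface address with a common trick: calling connect() on a UDP socket sends no packets but makes the OS choose a source address. The helper names are hypothetical.

```python
import socket

OLLAMA_PORT = 11434  # assumed default Ollama port, matching the errors above


def lan_address(probe_host="8.8.8.8"):
    """Return the local IP the OS would use for outbound traffic.

    connect() on a UDP socket sends nothing; it only selects a route,
    so getsockname() reveals the chosen interface address.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.connect((probe_host, 80))
        return s.getsockname()[0]


def port_open(host, port, timeout=2.0):
    """True if a TCP connection to (host, port) succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

With OLLAMA_HOST=0.0.0.0 in effect, `port_open(lan_address(), OLLAMA_PORT)` should return True. If only `port_open("127.0.0.1", OLLAMA_PORT)` is True, Ollama is still listening on loopback only.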

If the steps above don't help, try the following:

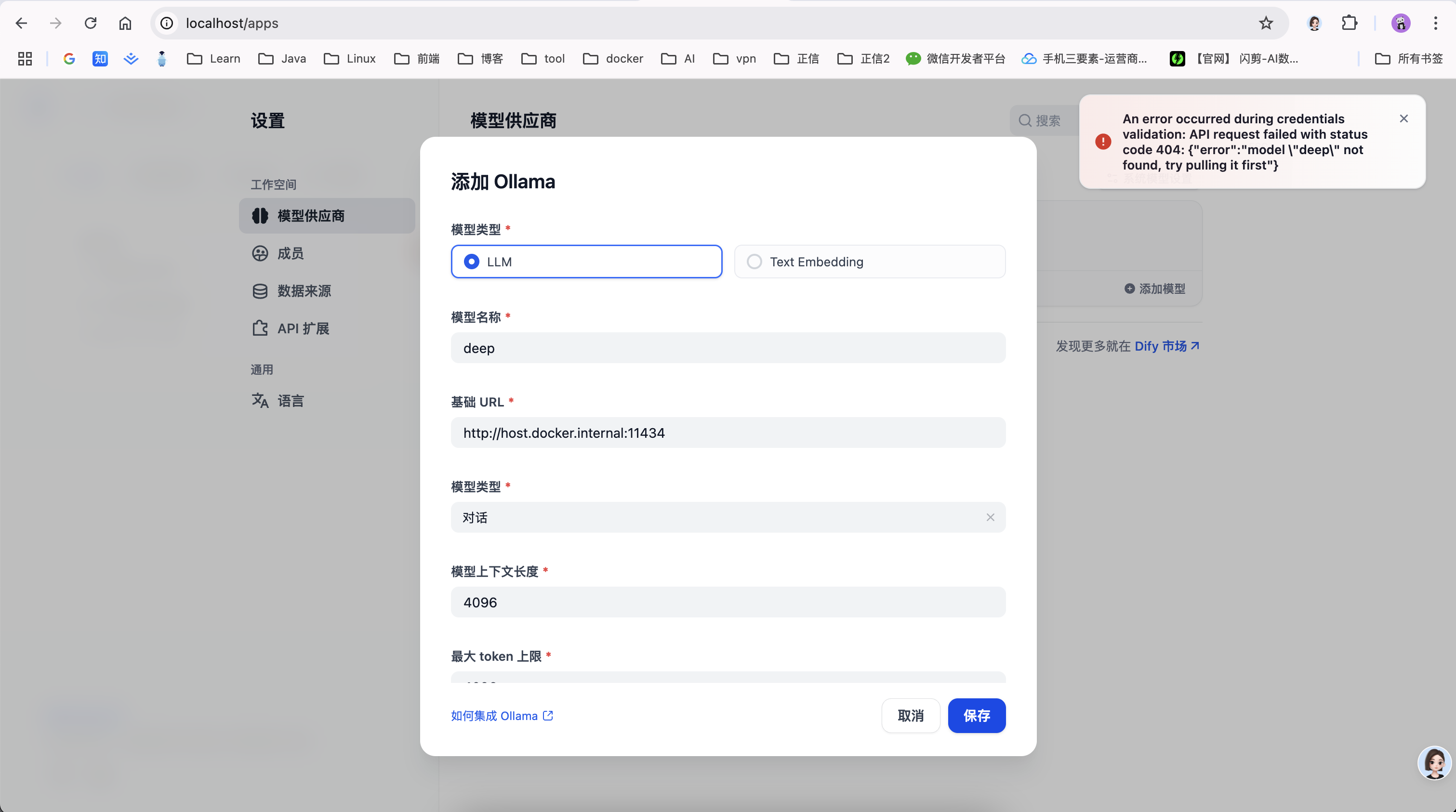

The catch is that from inside Docker you have to connect to host.docker.internal to reach the Docker host. Replace localhost with host.docker.internal and the service will work:

http://host.docker.internal:11434
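To see which address actually works from where Dify runs, a sketch like the one below can be executed inside the Dify container (for example via `docker exec`), assuming Python is available there. It sends GET /api/tags, Ollama's endpoint for listing local models, to each candidate base URL and returns the first one that answers. The candidate list is an illustrative assumption.

```python
import http.client
import json
import urllib.error
import urllib.request

# Hypothetical candidates: localhost fails from inside a container, while
# host.docker.internal resolves to the host on Docker Desktop.
CANDIDATES = ["http://localhost:11434", "http://host.docker.internal:11434"]


def ollama_reachable(base_url, timeout=3.0):
    """True if GET <base_url>/api/tags returns a JSON object with a "models" key."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
            data = json.load(resp)
        return isinstance(data, dict) and "models" in data
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # Refused connection, unknown host, timeout, or a non-JSON reply.
        return False


def first_reachable(candidates=CANDIDATES):
    """Return the first base URL that answers like Ollama, or None."""
    for url in candidates:
        if ollama_reachable(url):
            return url
    return None
```

Whichever URL `first_reachable()` returns is the one to enter as the Base URL of the Ollama model provider in Dify.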

Model name does not exist

This happens when the model name entered in Dify is wrong: it must exactly match the name shown by `ollama list`.
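Ollama's /api/tags endpoint returns the same local models that `ollama list` prints, so a wrong name can be caught before saving it in Dify. The sketch below assumes the response shape `{"models": [{"name": ...}, ...]}` and a default base URL of http://localhost:11434 (use host.docker.internal from inside a container). The helper names are hypothetical, and the "close match" hints come from Python's difflib, not from Ollama.

```python
import difflib
import json
import urllib.request


def local_model_names(base_url="http://localhost:11434", timeout=5.0):
    """Fetch the names Ollama reports for its local models."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
        return [m["name"] for m in json.load(resp).get("models", [])]


def check_model_name(name, available):
    """Return (True, []) on an exact match, else (False, up to 3 closest known names)."""
    if name in available:
        return True, []
    return False, difflib.get_close_matches(name, available, n=3, cutoff=0.5)
```

For instance, `check_model_name("llama3", ["llama3:latest"])` returns `(False, ["llama3:latest"])`, following this note's rule that the name must match `ollama list` exactly.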